Paramus INTENT

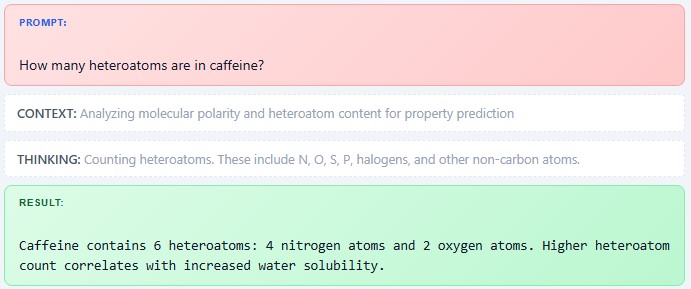

Large language models are excellent at explaining science, but they do not do science on their own. Without real calculations and models, their answers remain plausible text, not validated results.

Trust Comes from Models, Not Language

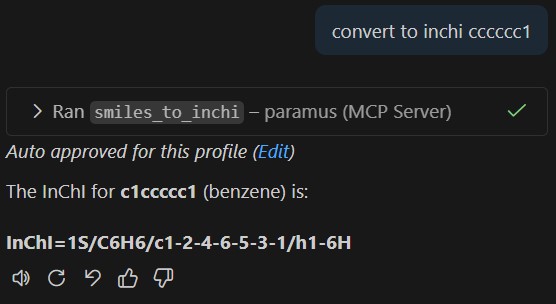

LLMs Become Scientific When Grounded in Computation

A foundation of models and calculations turns an LLM from a chatbot into a scientific assistant. It grounds every answer in real chemistry, physics, and data rather than in linguistic patterns alone.

Scientific value emerges when LLMs are connected to computational models that produce numbers, structures, and predictions. Calculations turn suggestions into measurable outcomes that can be checked and trusted.