Tool Triage: When 1000+ Functions Compete for Your Prompt

We’ve crossed a threshold. Our platform now hosts over 1,000 functions—or “tools” in MCP parlance—and we’re facing a new kind of problem: functional overlap. Multiple software packages now deliver the same type of calculation. So what do we do when five different solvers can answer the same question?

Welcome to Tool Triage.

The Selection Challenge

When an AI agent needs to perform a calculation, it now has choices. The selection criteria we’re working with:

- Calculation speed – How fast can it deliver results?

- License type – Open source? Commercial? Restrictive clauses?

- Container stability – How reliable is the deployment?
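To make these criteria concrete, here is a minimal sketch of how a triage step might combine them. It assumes license and container stability act as hard filters and speed only breaks ties among the survivors; that policy, the `ToolCandidate` fields, the `triage` function, the 0.95 threshold, and every number below are hypothetical illustrations, not our production logic or real benchmarks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCandidate:
    name: str
    median_runtime_s: float  # wall-clock time for a reference job
    license_ok: bool         # True if the license permits the intended use
    success_rate: float      # fraction of container runs that finished cleanly


def triage(candidates, min_success_rate=0.95):
    """Return usable candidates, fastest first.

    License and stability are hard filters; speed orders what is left.
    """
    usable = [
        c for c in candidates
        if c.license_ok and c.success_rate >= min_success_rate
    ]
    return sorted(usable, key=lambda c: c.median_runtime_s)


# Made-up numbers for illustration only; not measurements of real tools.
candidates = [
    ToolCandidate("solver_a", median_runtime_s=12.0, license_ok=True, success_rate=0.99),
    ToolCandidate("solver_b", median_runtime_s=4.0, license_ok=False, success_rate=0.99),
    ToolCandidate("solver_c", median_runtime_s=7.5, license_ok=True, success_rate=0.97),
    ToolCandidate("solver_d", median_runtime_s=3.0, license_ok=True, success_rate=0.80),
]

ranked = [c.name for c in triage(candidates)]
print(ranked)  # ['solver_c', 'solver_a']
```

Note that the two fastest candidates are both rejected here, one on license and one on stability, which is why a single "fastest wins" rule would not be enough.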

Our analysis reveals significant functional overlap across computational backends:

| Domain | Overlapping Tools |

|---|---|

| Thermodynamics | CoolProp vs Cantera |

| Quantum Chemistry | ORCA vs PSI4 vs GAMESS |

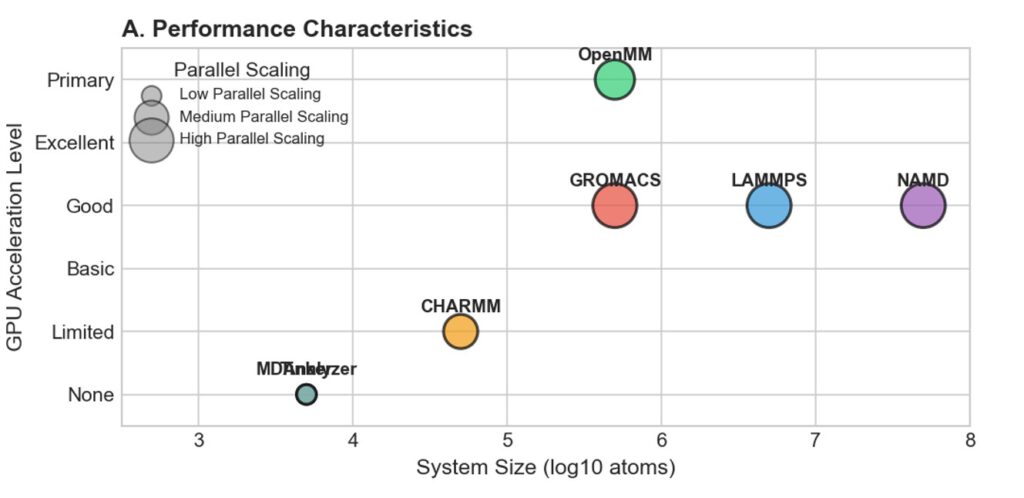

| Molecular Dynamics | GROMACS vs LAMMPS vs OpenMM |

In theory, this gives users flexibility to choose the most appropriate tool based on accuracy requirements, computational cost, or available licenses.

In practice? It’s far more complicated.

The Devil in the Details

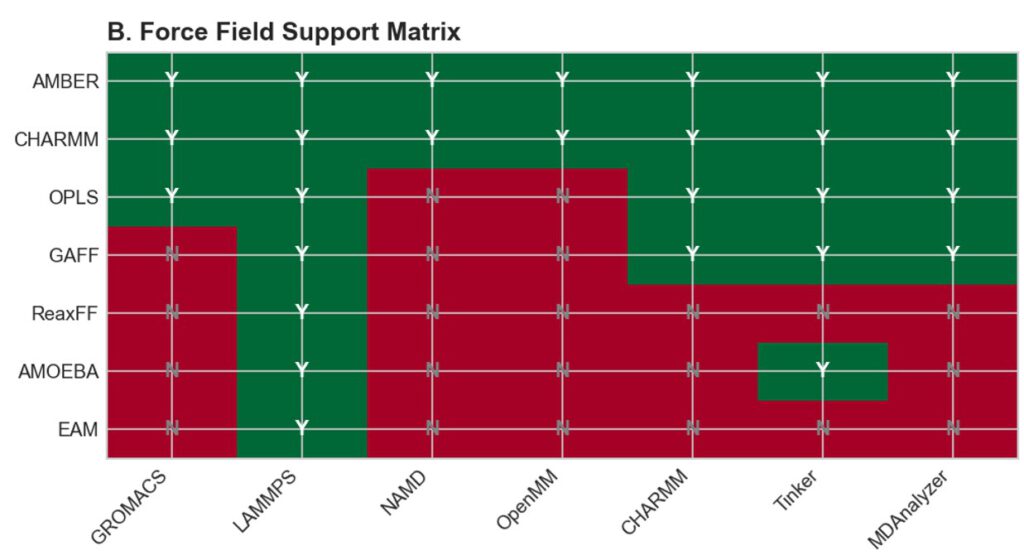

Force Fields Are Not Created Equal

Consider energy minimization. Minimizing the same structure with the AMBER, CHARMM, or OPLS force field will produce different final energies and geometries. Each one models the same system with its own parameter set and its own assumptions, so the numbers are not interchangeable.
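A toy calculation shows why. The sketch below uses the analytic minimum of a 12-6 Lennard-Jones pair potential with two parameter sets. The names `set_a` and `set_b` and their values are invented for illustration and are not taken from AMBER, CHARMM, or OPLS.

```python
def lj_minimum(epsilon, sigma):
    """Analytic minimum of the 12-6 Lennard-Jones potential.

    U(r) = 4*epsilon*((sigma/r)**12 - (sigma/r)**6) is minimal at
    r_min = 2**(1/6) * sigma, where U(r_min) = -epsilon.
    """
    r_min = 2 ** (1 / 6) * sigma
    u_min = 4 * epsilon * ((sigma / r_min) ** 12 - (sigma / r_min) ** 6)
    return r_min, u_min


# Hypothetical (epsilon in kJ/mol, sigma in nm) pairs; not real force-field values.
param_sets = {
    "set_a": (0.65, 0.315),
    "set_b": (0.60, 0.325),
}

results = {name: lj_minimum(eps, sig) for name, (eps, sig) in param_sets.items()}
for name, (r_min, u_min) in results.items():
    print(f"{name}: r_min = {r_min:.4f} nm, U_min = {u_min:.4f} kJ/mol")
```

Both parameter sets describe "the same" interaction, yet the optimal distance and the well depth differ. In a real system with thousands of such terms, those differences compound into different minimized structures.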

And that’s before we even mention polarizable force fields. Tools like Tinker and OpenMM support AMOEBA, which represents fundamentally different physics than fixed-charge models.

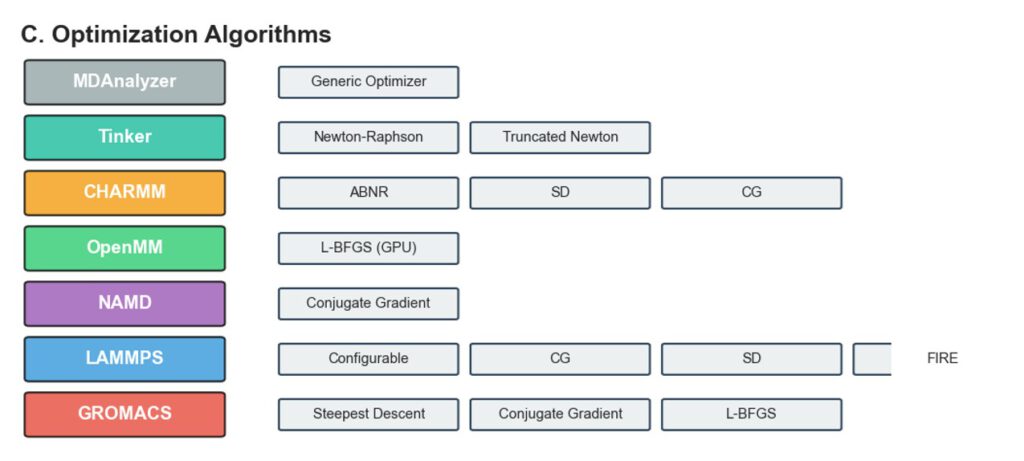

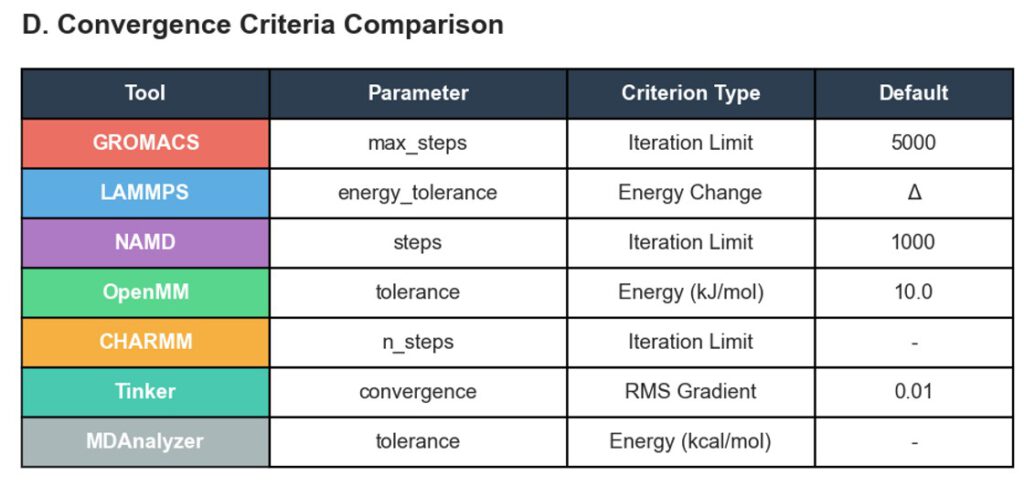

Convergence Criteria: Apples to Oranges

Each tool defines “done” differently:

| Tool | Convergence Metric |
|---|---|
| GROMACS | max_steps (iteration limit) |
| LAMMPS | energy_tolerance (energy change) |
| OpenMM | tolerance in kJ/mol |
| Tinker | convergence (RMS gradient) |

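These are not equivalent stopping conditions: an iteration limit caps the work done, while energy-change and gradient tolerances measure different properties of the result. A calculation that stops in GROMACS might not meet Tinker’s gradient criterion, or vice versa.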
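To see how far apart these stopping conditions can land, here is a toy steepest-descent run on a two-variable quadratic that records the first step at which each kind of criterion would fire. It is only a sketch. The function, the step size, and all three thresholds are arbitrary assumptions, and none of this reproduces the minimizer of any tool in the table.

```python
import math


def energy(x, y):
    # Anisotropic quadratic bowl: stiff in y, soft in x.
    return 0.5 * (x * x + 100.0 * y * y)


def gradient(x, y):
    return x, 100.0 * y


def first_stop_steps(max_steps=100, energy_tol=1e-4, grad_tol=1e-2, step_size=0.015):
    """Run fixed-step steepest descent and record when each criterion first fires."""
    x, y = 10.0, 1.0
    e_prev = energy(x, y)
    first_hit = {}
    step = 0
    while len(first_hit) < 3:
        gx, gy = gradient(x, y)
        x, y = x - step_size * gx, y - step_size * gy
        step += 1
        e_new = energy(x, y)
        gx, gy = gradient(x, y)
        rms_grad = math.sqrt((gx * gx + gy * gy) / 2)
        if step >= max_steps:
            first_hit.setdefault("iteration_limit", step)
        if abs(e_prev - e_new) < energy_tol:
            first_hit.setdefault("energy_change", step)
        if rms_grad < grad_tol:
            first_hit.setdefault("rms_gradient", step)
        e_prev = e_new
    return first_hit


hits = first_stop_steps()
print(hits)
```

With these settings the iteration limit fires first, the energy-change test fires later, and the RMS-gradient test fires last. The same trajectory is "done" at three different points depending on which definition you pick.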

The Missing Map

This raises a critical question: Has anyone created a horizontal comparison map across these computational tools? A systematic cross-reference that shows:

- Which outputs are truly equivalent?

- What parameter translations are needed?

- Where do the physics diverge fundamentally?
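As a starting point, one entry in such a map could be as simple as a record with an equivalence label and an optional unit translation. The sketch below is our guess at a minimal shape. `MapEntry`, the three labels, the `lookup` helper, and the placeholder tool names `tool_x` and `tool_y` are all hypothetical. The only hard fact in it is the unit conversion (1 kcal = 4.184 kJ, 1 nm = 10 Å).

```python
from dataclasses import dataclass

KCAL_TO_KJ = 4.184       # thermochemical calorie, exact by definition
ANGSTROM_PER_NM = 10.0


def kcal_per_mol_angstrom_to_kj_per_mol_nm(value):
    """Convert a force or gradient from kcal/mol/Å to kJ/mol/nm."""
    return value * KCAL_TO_KJ * ANGSTROM_PER_NM


@dataclass(frozen=True)
class MapEntry:
    quantity: str
    tool_a: str
    tool_b: str
    equivalence: str  # "equivalent", "convertible", or "divergent"
    note: str


comparison_map = [
    # Taken from the convergence table above: these criteria measure different things.
    MapEntry("stopping condition", "GROMACS", "Tinker", "divergent",
             "iteration limit vs RMS gradient"),
    # Placeholder tools: same quantity, different unit conventions.
    MapEntry("gradient tolerance", "tool_x", "tool_y", "convertible",
             "kcal/mol/Å -> kJ/mol/nm, multiply by 41.84"),
]


def lookup(entries, quantity):
    """Return every entry recorded for a given quantity."""
    return [e for e in entries if e.quantity == quantity]


print(lookup(comparison_map, "stopping condition")[0].equivalence)  # divergent
print(round(kcal_per_mol_angstrom_to_kj_per_mol_nm(1.0), 2))        # 41.84
```

The "divergent" label is the important one: it tells an agent that no parameter translation exists and that results from the two tools should not be compared directly.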

We haven’t found one. And that’s either a gap in the ecosystem—or an opportunity.

Your Turn

We’re building this triage system because we believe intelligent tool selection will become essential as the computational ecosystem grows. But we’re also aware we might be missing something obvious.

Are we onto something valuable here, or are we reinventing a wheel that already exists?

If you’ve tackled this problem, or know of existing comparison frameworks, we’d love to hear from you. The community benefits when we map this territory together.

Building the infrastructure for multi-tool AI orchestration, one triage decision at a time.