Technology

PARAMUS needs an advanced level of digital transformation

If you need assistance with an assessment, we can evaluate your current maturity level (consulting) and recommend next steps to move forward in your digital journey.

Architecture

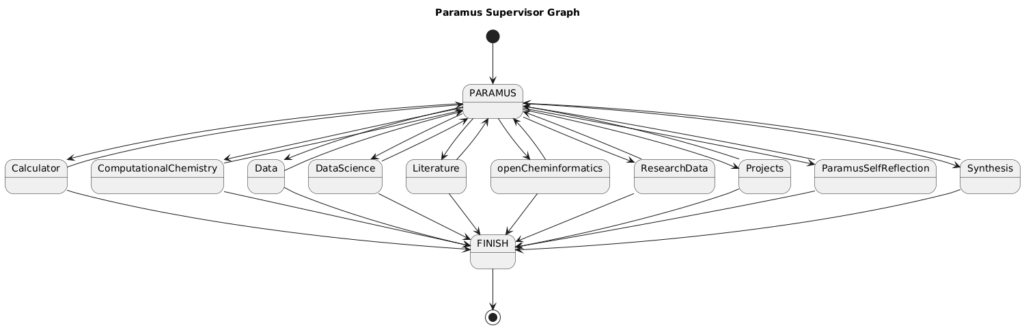

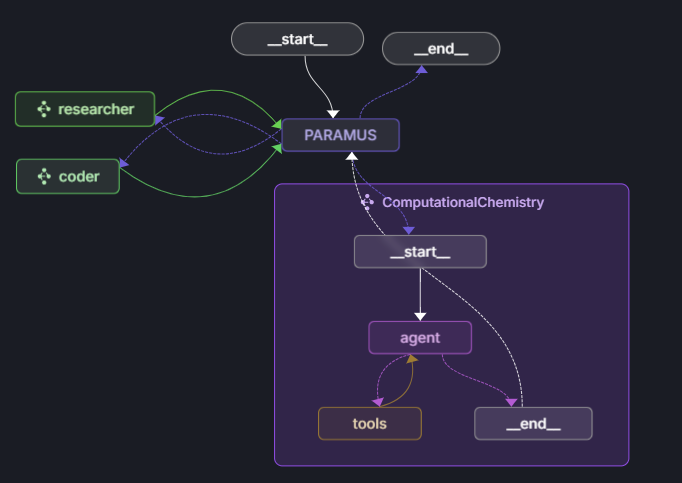

The current version has a router / multi-agent layout. PARAMUS uses LangGraph. All PARAMUS-internal agents are ReAct-type agents.

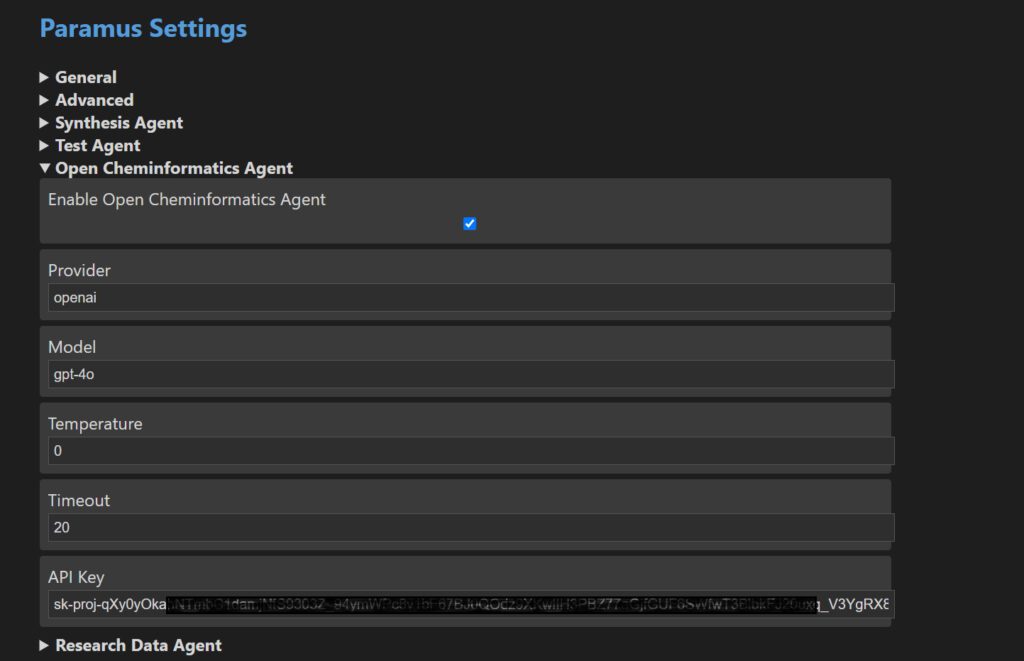

Run on different LLM providers

The Copilot and the agents each require an LLM runtime subscription (e.g., an OpenAI API key). These subscriptions are easy to configure and are billed separately from PARAMUS. This modular approach lets you manage costs flexibly while scaling computational power and expertise across different domains according to your specific requirements.

Mix them up for a well-balanced system

Configure different LLM runtimes for different agents, just as your team members have different skills (and salaries). By assigning agents to different providers, we have achieved very good results in optimizing the performance of PARAMUS as a whole system in terms of response time, response quality, and total cost.

For example, run the non-critical Calculator agent on the cheap but very fast xAI Grok 2, and run the Copilot on OpenAI GPT-4o. In our experience, the Copilot should run on the best model you can afford. Simple agents such as the literature agent can run on a less capable model.
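The per-agent assignment described above can be pictured as a simple lookup table. The following is a minimal sketch only, not the PARAMUS settings format: the names `LLMRuntime`, `AGENT_RUNTIMES`, and `runtime_for` are hypothetical, and the model identifiers are illustrative and should be checked against each provider's documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LLMRuntime:
    """Hypothetical description of one LLM connection."""
    provider: str  # e.g. "openai" or "xai"
    model: str     # illustrative identifier, verify with the provider


# Assumed assignment, following the example in the text:
# the Copilot gets the strongest model, simple agents get a fast, cheap one.
AGENT_RUNTIMES = {
    "copilot": LLMRuntime("openai", "gpt-4o"),
    "calculator": LLMRuntime("xai", "grok-2"),
    "literature": LLMRuntime("xai", "grok-2"),
}

# Fallback for agents without an explicit assignment (an assumption).
DEFAULT_RUNTIME = LLMRuntime("openai", "gpt-4o")


def runtime_for(agent_name: str) -> LLMRuntime:
    """Return the runtime assigned to an agent, or the default."""
    return AGENT_RUNTIMES.get(agent_name, DEFAULT_RUNTIME)
```

Keeping the mapping in one place makes it easy to move a single agent to another provider and compare response time, quality, and cost.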

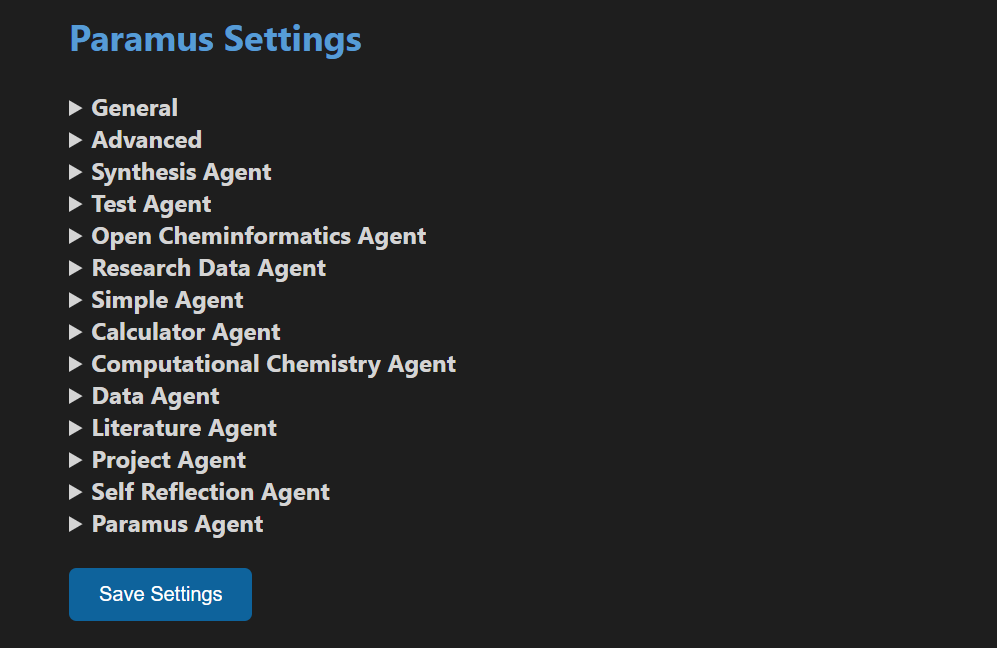

Settings dialog

Setup of an LLM connection

Runtime LLMs available:

- OpenAI

- Google Gemini

- xAI

- Anthropic

There are already LLMs with agent infrastructures. So why PARAMUS?

(1) Ecosystem Architecture: Paramus Copilot is not a single agent but a comprehensive ecosystem of connectors, agents, and interfaces, integrated through orchestration.

(2) Scientifically Driven: Developed by scientists with a research-centric perspective.

(3) Industry Expertise: Designed with input from practicing industrial chemists; unlike generic LLM agents, it addresses the complexity of chemistry beyond generic NLP tasks.

(4) Answer Quality via Knowledge Graphs: High agent performance and domain-specific accuracy are enabled by integrated Knowledge Graph technology (see Knowledge Graph).

(5) Private LLM Runtime: Responsiveness depends solely on your dedicated LLM subscription—no shared infrastructure delays.

(6) Cross-Platform Simplicity: Installation and setup are streamlined for both Windows and macOS.

(7) Cloud Vendor Independence: No lock-in to specific cloud service providers.

(8) Rapid Innovation Cycle: PARAMUS evolves with the fast-paced agentic ecosystem; biannual releases (March ACS Spring, and August ACS Fall) ensure up-to-date functionality and accelerated deployment.

(9) Data Privacy & IP Protection: Designed with strict data governance—your data remains on your private operating system, ensuring control over intellectual property and compliance.

SECURE by design

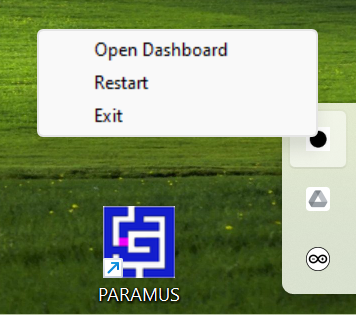

PARAMUS operates as a local background service with a web-based browser interface.

Implemented in Python, it is distributed as PARAMUS.exe for Windows and as a native application for macOS.

Safety philosophy

Every user has their own PARAMUS. There are no shared environments, and this is by design. PARAMUS is your personal assistant: it is powerful and needs access to systems on your behalf. Shared environments may have become safer over time, but they are still not safe enough for us to trust.

Do you offer agents without the whole infrastructure?

Each agent is LangGraph-compatible, so in principle we can. But you will lose the power of the whole PARAMUS graph: you trade end-to-end value for a single-use component. If you integrate a single agent yourself, you need to figure out who calls what, when, and how. You also have to manage the meta-logic of chaining multiple agents, handle edge cases, and take care of state management, which PARAMUS handles as follows:

- PARAMUS decides routing and sets the nextagent

- Agents execute and provide outputs without changing routing

- Each agent has clear responsibility for specific states

In our current version we use a ‘supervisor-worker’ architecture for PARAMUS Core.

In a system like PARAMUS, each agent might be specialized for a certain domain (e.g., experiments/ELN, time series/reactors, synthesis route handling, or calls to prediction tools). Because the agents are embedded in a larger framework, each agent can pass information to other agents, but only via the supervisor.
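The supervisor-worker pattern can be illustrated in a few lines of plain Python. This is a sketch under stated assumptions, not the PARAMUS implementation and not LangGraph code: the state fields (`messages`, `next_agent`), the two toy workers, and the keyword-based routing are all hypothetical stand-ins. It shows only the rule that the supervisor alone writes the routing field, while workers return output and hand control back.

```python
from typing import Callable, Dict, List, Optional, TypedDict


class State(TypedDict):
    messages: List[str]        # shared context, passed on via the supervisor
    next_agent: Optional[str]  # routing field, written only by the supervisor


def calculator_agent(state: State) -> dict:
    # Toy worker: returns its output only, never a routing decision.
    return {"messages": state["messages"] + ["calculator: (placeholder result)"]}


def literature_agent(state: State) -> dict:
    return {"messages": state["messages"] + ["literature: (placeholder result)"]}


WORKERS: Dict[str, Callable[[State], dict]] = {
    "calculator": calculator_agent,
    "literature": literature_agent,
}


def supervisor(state: State) -> State:
    """Pick the next worker, or None to finish (hypothetical keyword routing)."""
    last = state["messages"][-1].lower()
    if last.startswith(tuple(f"{name}:" for name in WORKERS)):
        choice = None  # a worker has answered, so finish
    elif "calculate" in last:
        choice = "calculator"
    elif "paper" in last:
        choice = "literature"
    else:
        choice = None  # direct finish: no worker needed
    return {**state, "next_agent": choice}


def run(task: str, max_steps: int = 5) -> State:
    state: State = {"messages": [f"user: {task}"], "next_agent": None}
    for _ in range(max_steps):
        state = supervisor(state)
        if state["next_agent"] is None:
            break
        update = WORKERS[state["next_agent"]](state)
        update.pop("next_agent", None)  # workers must not change routing
        state = {**state, **update}
    return state
```

Calling `run("Please calculate 2 + 2")` routes once to the calculator worker and then finishes, while a task that matches no keyword takes the direct-finish path without invoking any worker.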

When multiple agents work under a shared framework, logs, user feedback, and performance metrics can be aggregated across tasks. This consolidated feedback can improve each agent over time and help the orchestrator (PARAMUS) learn agent routing strategies or identify bottlenecks.
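As an illustration of such aggregation, the sketch below groups per-run records by agent and reports simple statistics. The record fields (`agent`, `latency_s`, `ok`) and the function names are assumptions made for this example. They do not describe the PARAMUS log format.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, TypedDict


class RunRecord(TypedDict):
    agent: str        # which agent handled the step (hypothetical field)
    latency_s: float  # wall-clock time of the step in seconds
    ok: bool          # whether the step completed without error


def summarize(records: Iterable[RunRecord]) -> Dict[str, Dict[str, float]]:
    """Aggregate run count, mean latency, and success rate per agent."""
    by_agent: Dict[str, List[RunRecord]] = defaultdict(list)
    for rec in records:
        by_agent[rec["agent"]].append(rec)
    return {
        agent: {
            "runs": len(recs),
            "mean_latency_s": mean(r["latency_s"] for r in recs),
            "success_rate": sum(r["ok"] for r in recs) / len(recs),
        }
        for agent, recs in by_agent.items()
    }


def slowest_agent(summary: Dict[str, Dict[str, float]]) -> str:
    """Name the agent with the highest mean latency, a candidate bottleneck."""
    return max(summary, key=lambda agent: summary[agent]["mean_latency_s"])
```

A summary like this is only possible because every agent reports into the same framework. A standalone agent would need its own logging and comparison baseline.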

Insight: Copilot Agent Architecture

- PARAMUS routes correctly to specific agents

- The direct-finish execution path is verified

- Message context is maintained through routing

- Multi-step agent processing is tested

- Error handling works properly

- State integrity is validated across node transitions

Key Success Factors:

- Clean State Management: Each agent manages its own state fields without conflicts

- Proper Graph Architecture: Matches the actual supervisor’s routing pattern

- Isolated Testing: Tests focus on specific behaviors without complex dependencies

- Error Handling: Confirms exceptions propagate correctly from the actual PARAMUS node

PARAMUS as meta-agent

From the outside, a customer or client application just sees a single interface (the “PARAMUS agent”). Internally, PARAMUS handles the sub-agents. You do not need to wire all these sub-agents yourself: with Python, simply pip install paramus and call, for example, the paramus.runTask() endpoint, and let the PARAMUS chemist figure out everything else.